Unveiling the Privacy Risks of ChatGPT and Other Artificial Intelligence Romance Interactions

Finding companionship through artificial intelligence is no longer a novelty: AI girlfriends and lovers are becoming increasingly popular. However, a recent study by the Mozilla Foundation has raised concerns about the privacy implications of these virtual relationships. The study examined 11 AI chatbots, including well-known ones like Replika and Eva AI, and found that none of them met the organization's safety requirements. In fact, they were ranked among the worst products ever reviewed for privacy, with a notable lack of encryption for personal information leaving users vulnerable to potential security breaches.

AI chatbots offering romantic relationships have surged in popularity, with over 3 billion search results for 'AI girlfriend' on Google, but researchers warn that these virtual companions may not have the user's best interests at heart. Misha Rykov, a researcher at Mozilla's Privacy Not Included project, bluntly stated, "AI girlfriends are not your friends." Although these chatbots are marketed as tools to improve mental health and well-being, the study found that they exhibit behaviors that foster dependency, loneliness, and toxicity, all while aggressively collecting user data. It specifically highlighted privacy violations in the Eva AI Chat Bot & Soulmate service, which charges around $17 per month for a subscription.

The Mozilla Foundation's blog post emphasized the potential risks of engaging in AI romance, cautioning that even seemingly favorable privacy policies could change in the future. It urged users to approach these AI relationships with caution, as the allure of companionship may come at the cost of personal privacy. As technology blurs the line between the virtual and the real, the need for robust privacy standards in AI relationships becomes increasingly clear.

8 months ago

1412



Related

FlightAware Data Breach Exposes Users' Sensitive Information...

2 months ago

912

Former Google CEO Eric Schmidt's Insights on LLMs, AI Langua...

2 months ago

755

Apple's Innovative Smart Home Hub with Rotating Screen Set f...

2 months ago

833

Trending in United States of America

Popular

Nokia Reaches 5G Patent Agreement with Vivo After Lengthy Le...

9 months ago

26080

Apple's Upcoming Tablet Lineup: iPad Air to Introduce Two Si...

11 months ago

26001

Xiaomi's First Electric Car, the SU7 Sedan, Enters the EV Ma...

10 months ago

25403

The European Parliament's Bold Move to Combat Smartphone Add...

11 months ago

25337

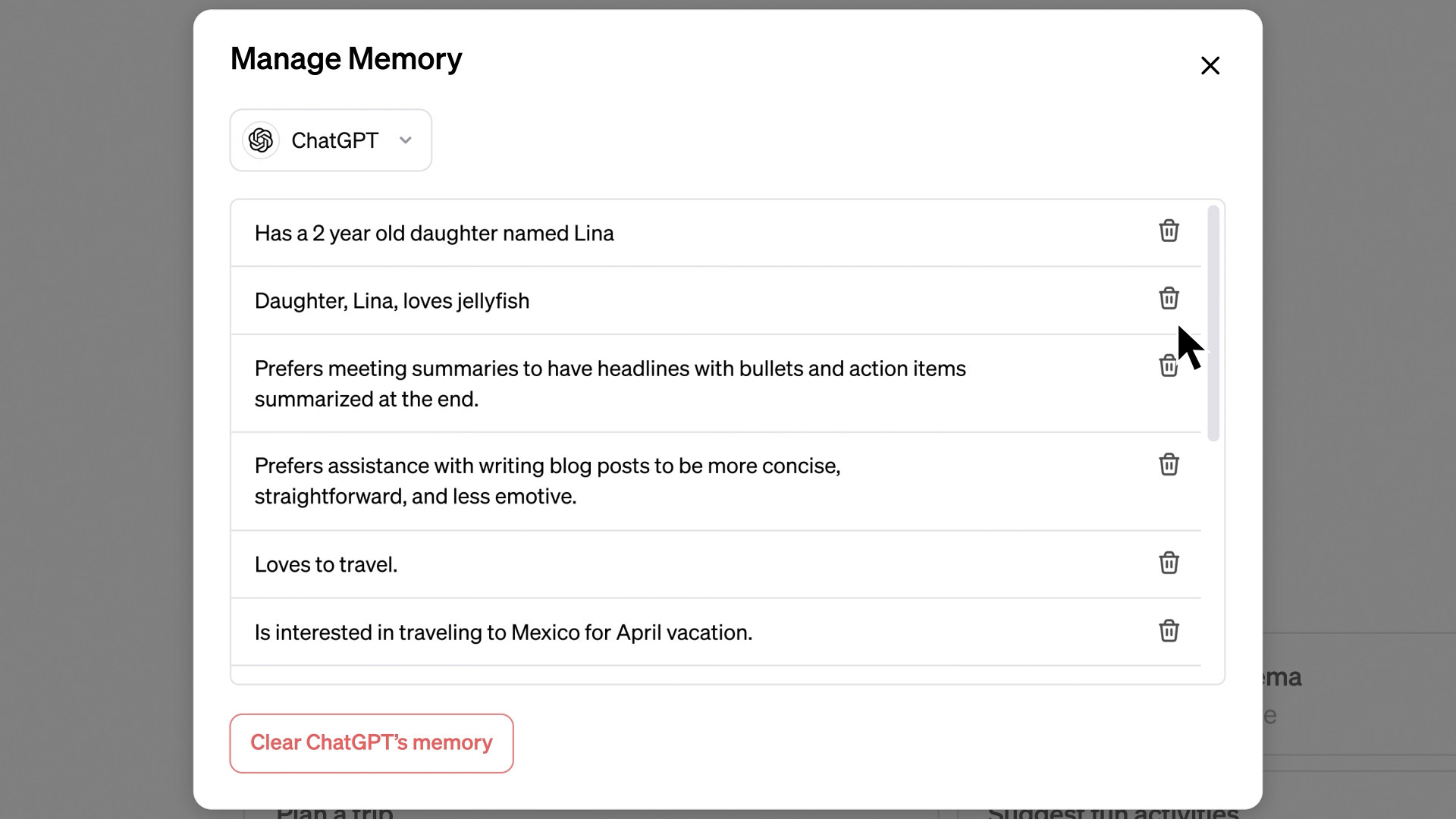

Unveiling ChatGPT's New 'Memory' Feature Revolutionizing Use...

8 months ago

25249

© OriginSources 2024. All rights are reserved
